How to Create a Personal AI Assistant with Ollama + NativeMind

NativeMind Team · 6 min read

🤔 What is Ollama?

Ollama is a tool that lets you run AI models on your own computer. Installing it is as simple as installing a desktop app like Chrome. Once installed, you can:

- Use AI completely offline - chat without needing an internet connection

- Protect your privacy - all data stays on your computer and never gets uploaded to the cloud

- Use it for free - no paid subscriptions needed, install once and use forever

- Access multiple AI models - choose from a range of open-source models, some of which approach ChatGPT in quality for everyday tasks

📋 Pre-Installation Requirements

System Requirements (macOS Example)

- macOS 11 Big Sur or later

- At least 16GB RAM (24GB+ recommended)

- Apple Silicon chip recommended for better performance (M1, M2, M3, etc.)

- At least 10GB available disk space

- Stable internet connection (only needed for downloading)
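If you would rather check the RAM and disk requirements from a script than from "About This Mac", the following is a minimal sketch. The function names are hypothetical, the thresholds are the 16GB and 10GB figures from the list above (treated here as binary gigabytes, which is an assumption), and the RAM lookup relies on the macOS `sysctl -n hw.memsize` command, so it only runs on a Mac.

```python
import platform
import shutil
import subprocess

GIB = 1024 ** 3  # bytes per gigabyte (binary); an assumption about how "GB" is meant


def meets_requirements(ram_bytes, free_disk_bytes, min_ram_gb=16, min_disk_gb=10):
    """Return (ram_ok, disk_ok) for the thresholds listed in this guide."""
    return ram_bytes >= min_ram_gb * GIB, free_disk_bytes >= min_disk_gb * GIB


def read_mac_ram_bytes():
    """Ask macOS for the installed memory in bytes (macOS only)."""
    out = subprocess.run(
        ["sysctl", "-n", "hw.memsize"],
        capture_output=True, text=True, check=True,
    )
    return int(out.stdout.strip())


if __name__ == "__main__" and platform.system() == "Darwin":
    free_bytes = shutil.disk_usage("/").free  # free space on the startup volume
    ram_ok, disk_ok = meets_requirements(read_mac_ram_bytes(), free_bytes)
    print(f"RAM ok: {ram_ok}, disk ok: {disk_ok}")
```

The comparison logic is kept separate from the macOS lookup so it can be reused or tested on any machine.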

💡 Pro Tip

Just like running demanding games, AI models require substantial hardware resources. Macs with Apple Silicon chips run AI models most efficiently, while Intel-based Macs can still run them but with relatively slower performance.
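Not sure which kind of chip your Mac has? One quick way to find out, sketched below, is to ask Python for the machine architecture. The helper name is hypothetical, and the mapping assumes you are running it on a Mac: "arm64" indicates Apple Silicon, while "x86_64" indicates an Intel Mac (or a Python build running under Rosetta translation).

```python
import platform


def describe_chip(machine):
    """Map a platform.machine() value to the chip families this guide mentions."""
    if machine == "arm64":
        return "Apple Silicon"
    if machine == "x86_64":
        return "Intel (or Python running under Rosetta)"
    return "unknown"


if __name__ == "__main__":
    # On a Mac, this prints which chip family Python sees.
    print(describe_chip(platform.machine()))
```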

🚀 Step 1: Download Ollama

1.1 Visit the Official Website

Open your browser and go to https://ollama.com

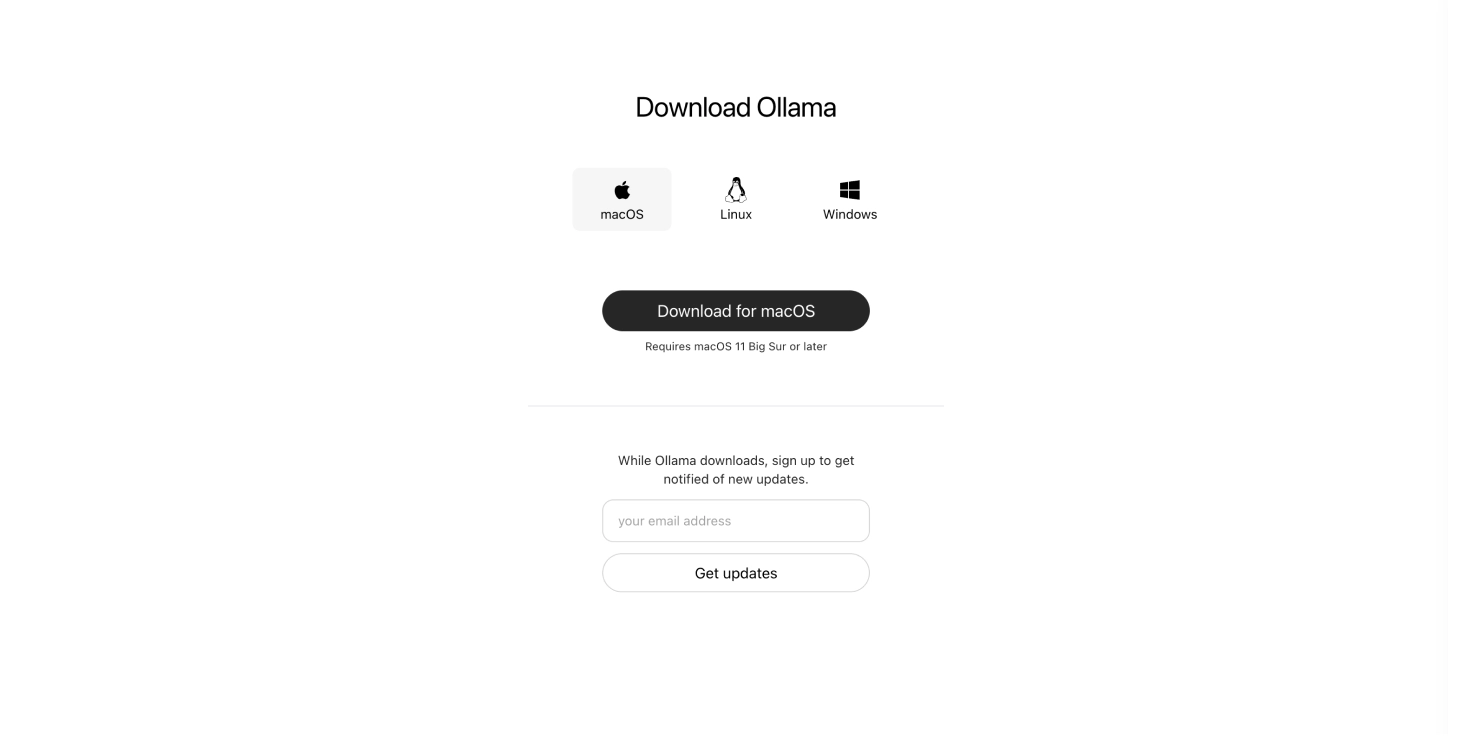

1.2 Download the macOS Version

The website will automatically detect your Mac system. Click the "Download for macOS" button to start downloading.

This will automatically download the installer package, approximately 180MB in size.

🔧 Step 2: Install Ollama

2.1 Open the Downloaded Installer

- Locate the downloaded file, usually found in your "Downloads" folder. The file name will be something like Ollama-darwin.zip

- Double-click the zip file to extract it automatically

- Double-click the extracted Ollama.app file

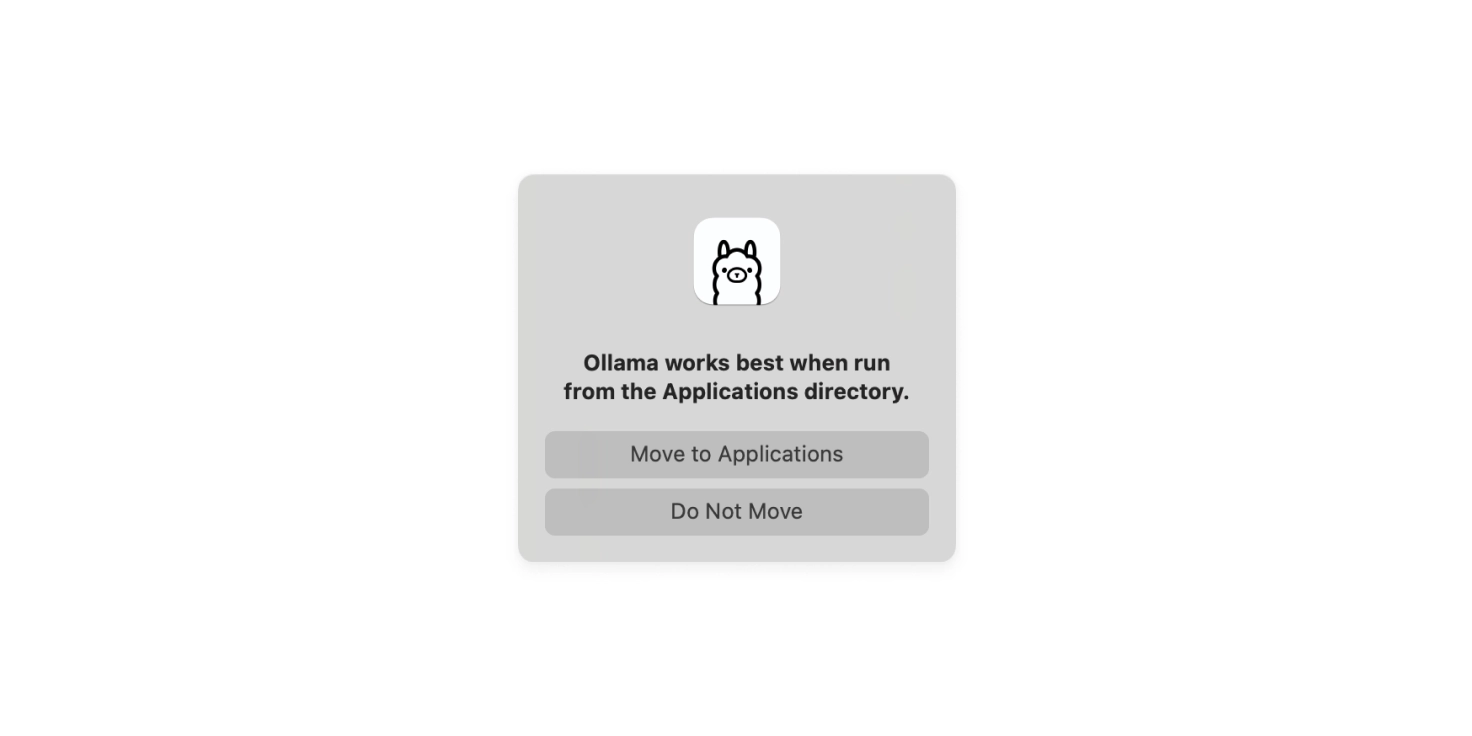

2.2 Move to Applications Folder

After double-clicking Ollama, you'll see a prompt: "Ollama works best when run from the Applications directory."

Please select "Move to Applications" - this ensures Ollama will function properly.

2.3 Ollama Initial Setup

After the move completes, Ollama displays a welcome screen that walks you through three steps:

- Click "Next" to continue

- Install command line tools

- Prepare to run your first model

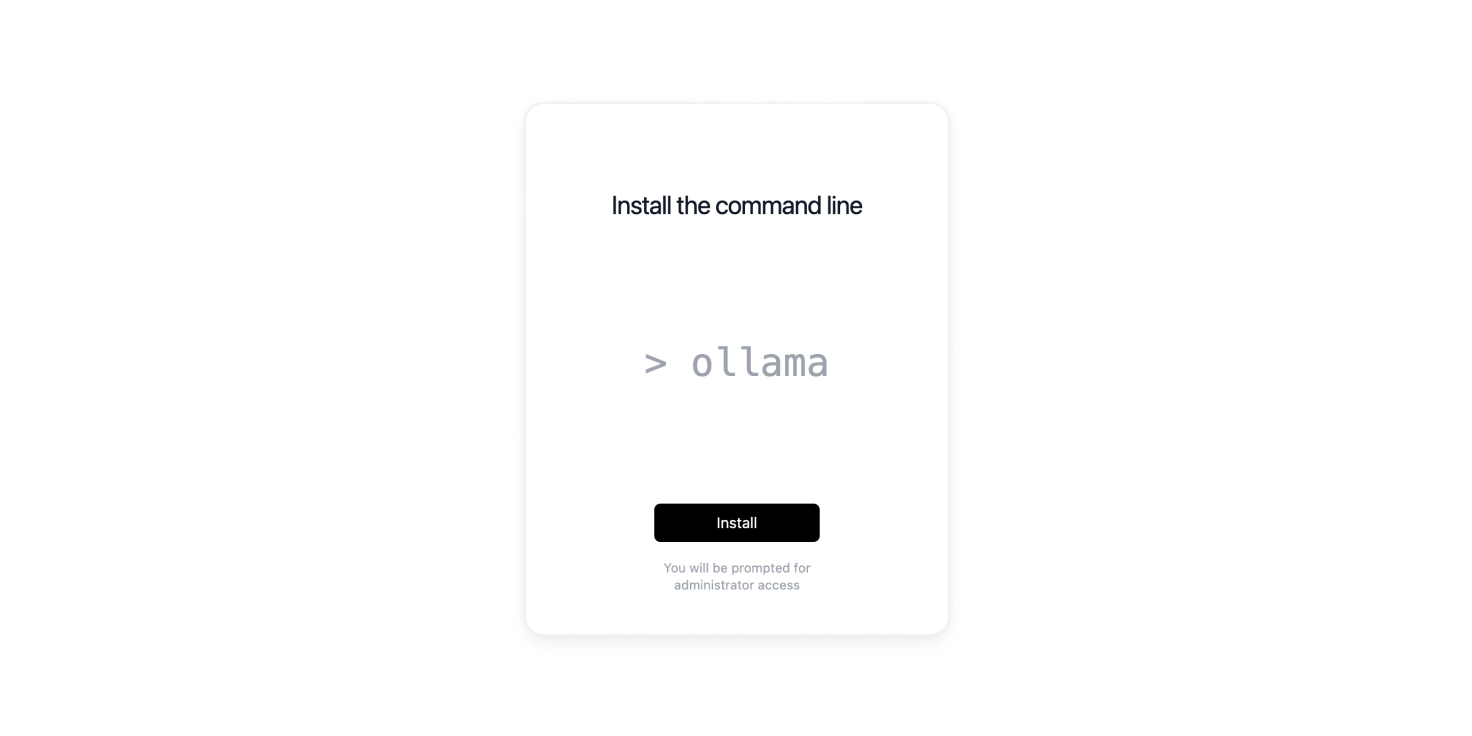

2.4 Install Command Line Tools

Next, you'll see the "Install the command line" interface:

Click the "Install" button, and the system will prompt you to:

- Enter your Mac's password (this is needed to install system-level components)

- After entering the password, the command line tools will be installed
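To confirm that the command line tools were installed, you can check whether the `ollama` command is now available. The sketch below is one way to do that, assuming the installer placed `ollama` on your PATH. The helper name is hypothetical, and `--version` is the only Ollama flag it relies on.

```python
import shutil
import subprocess


def cli_version(command="ollama"):
    """Return the output of `<command> --version`, or None if it is not on PATH."""
    path = shutil.which(command)
    if path is None:
        return None
    result = subprocess.run([path, "--version"], capture_output=True, text=True)
    # Some tools print version details to stderr, so fall back to it if stdout is empty.
    return (result.stdout or result.stderr).strip()


if __name__ == "__main__":
    version = cli_version()
    print(version if version else "ollama command not found; try the Install step again")
```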

2.5 Complete Installation

Finally, you'll see the "Run your first model" interface:

Seeing this interface means Ollama has been successfully installed! Click "Finish" to complete the installation.

🎯 Step 3: Download Your First AI Model in NativeMind

Now that Ollama is installed, you'll see the llama icon in the top-right corner of your Mac's menu bar. Next, we need to download an AI model through the NativeMind extension. Just like buying a gaming console still requires buying games, installing Ollama is only the first step.

3.1 Understanding Different Models

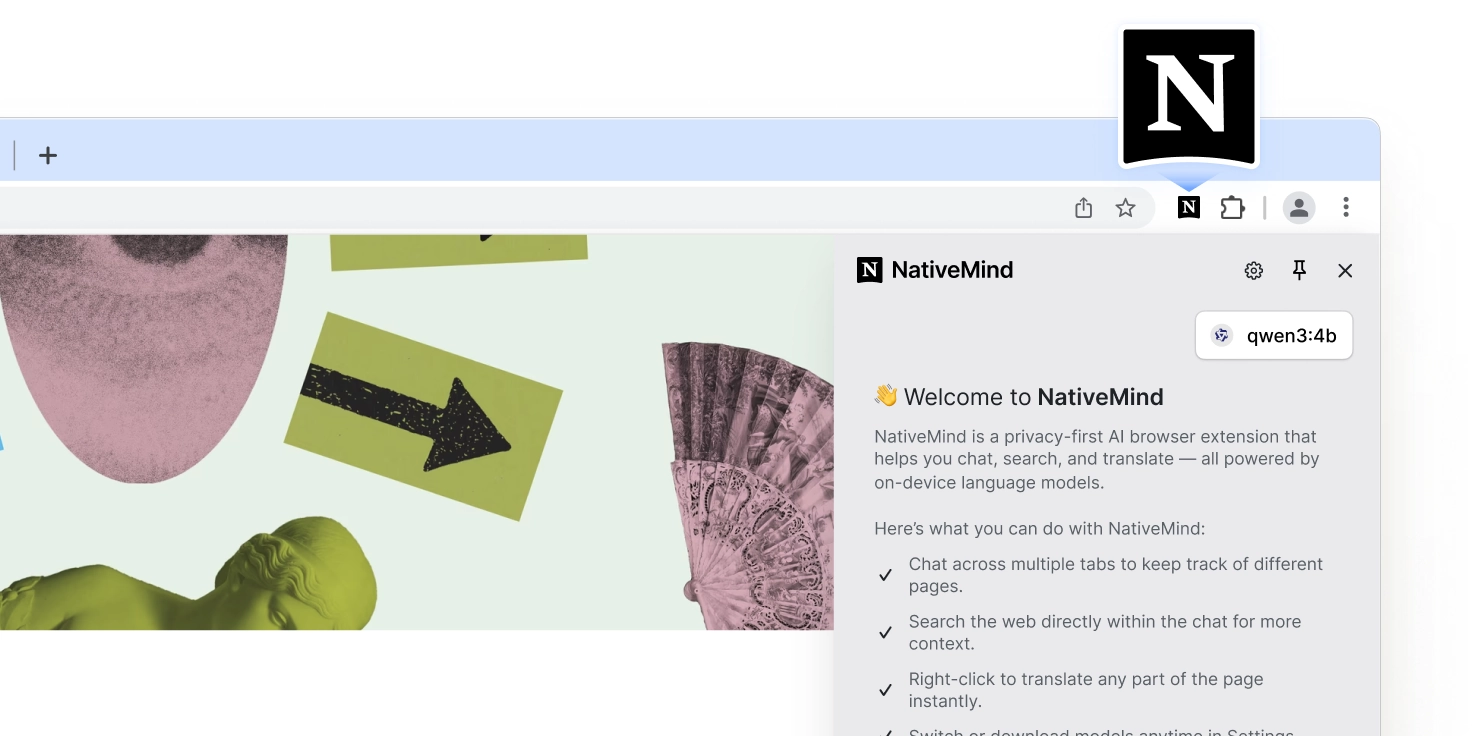

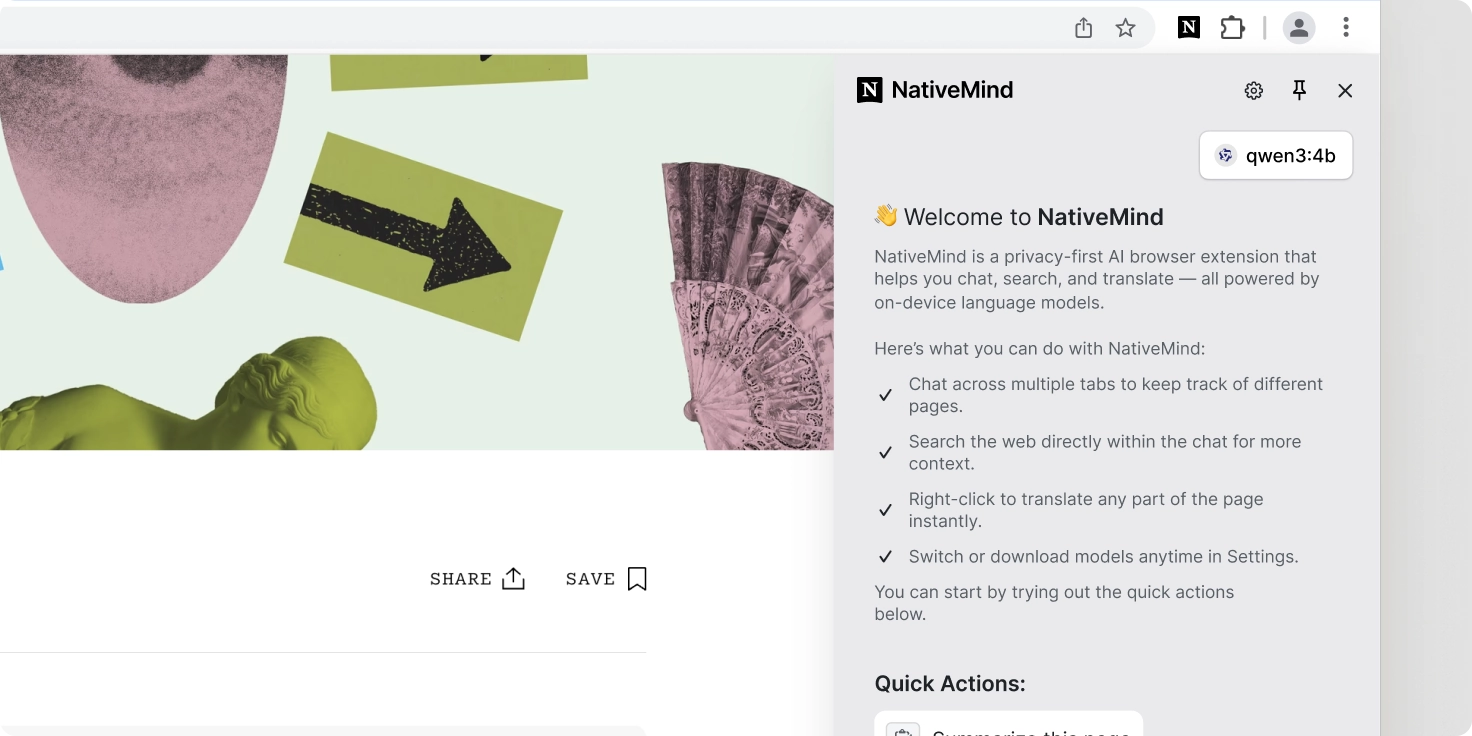

3.2 Open the NativeMind Extension

- Launch your browser (Chrome/Edge, etc.)

- Click the NativeMind extension icon (usually in the top-right corner of the browser)

- On first launch, it will automatically detect Ollama status

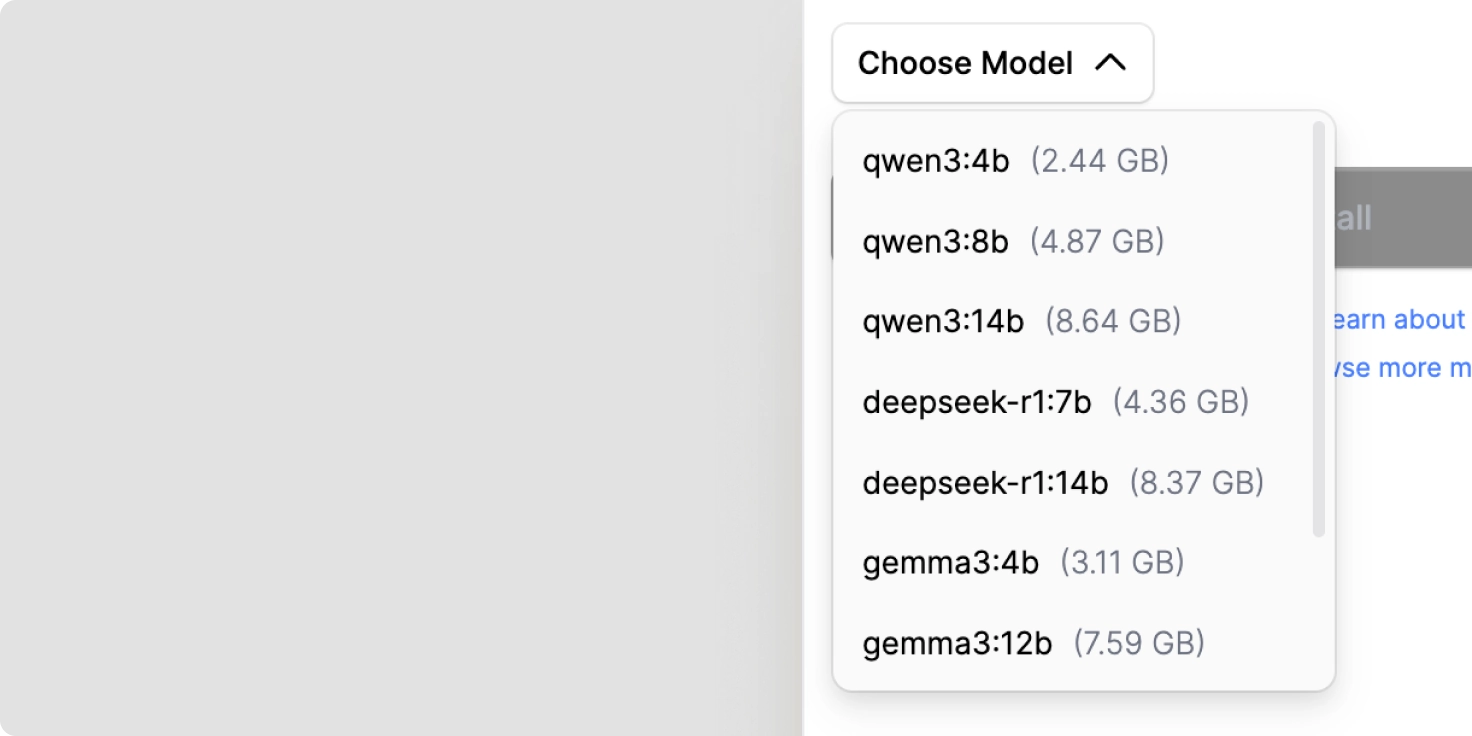

3.3 Select and Download a Model

When NativeMind detects that Ollama is installed, it will display the model selection interface.

If it doesn't detect Ollama, click the "Re-scan for Ollama" button in the extension to check again.

We strongly recommend choosing qwen3:4b:

- ✅ Small file size (2.6GB), quick download, immediate access to powerful local reasoning

- ✅ Alibaba's latest generation open-source large model

- ✅ Supports 119 languages including English, French, Spanish, German, Japanese, Korean, Chinese, and more

- ✅ Low memory usage (4GB), runs easily on 16GB RAM computers

- ✅ Strong performance for its size: according to the Qwen team, the 4B model can rival the previous-generation Qwen2.5-72B-Instruct

- ✅ Powerful reasoning and translation capabilities

- ✅ Beginner-friendly

3.4 Start Download

- Select

qwen3:4bfrom the dropdown menu - Click the "Download & Install" button

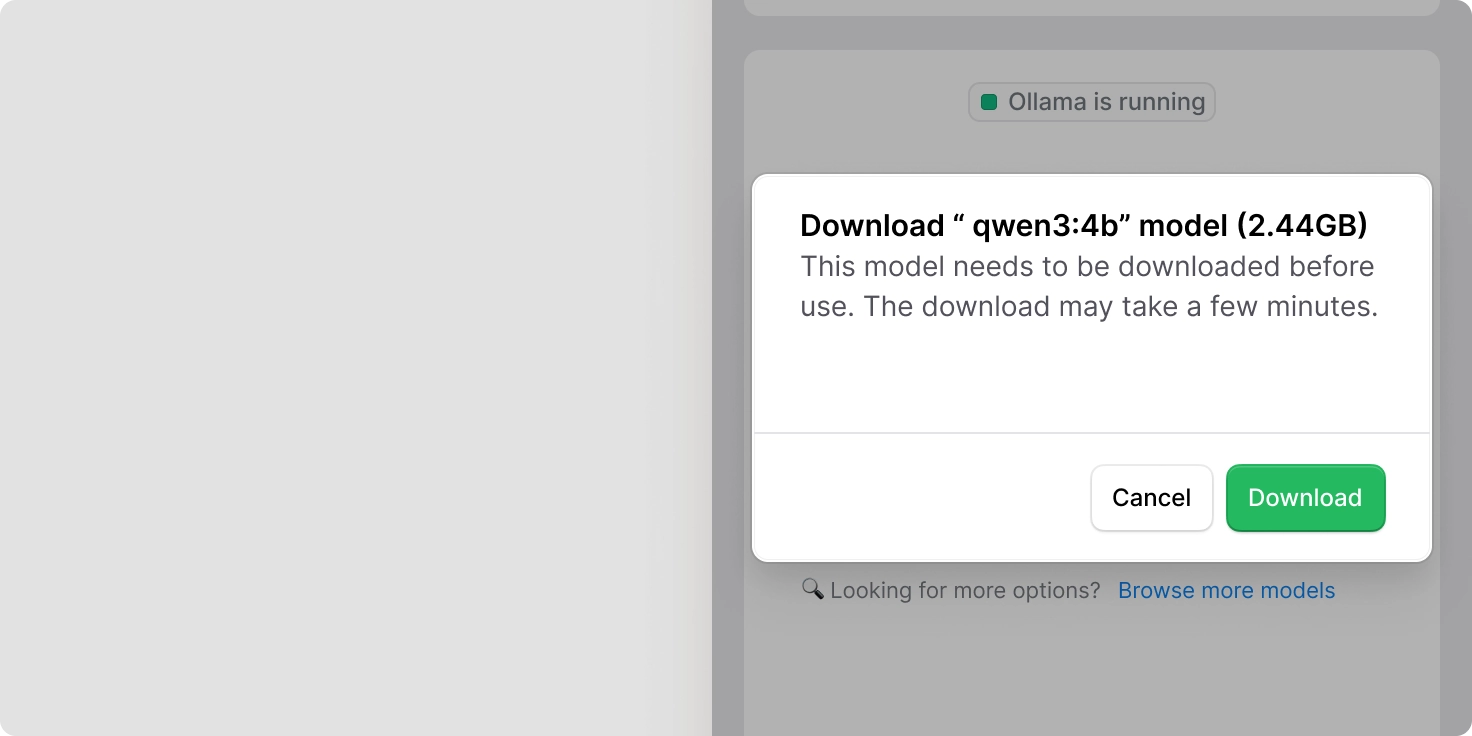

- Confirm the download popup

- Will display:

Download "qwen3:4b" Model (2.6GB) - Click "Download" to start downloading

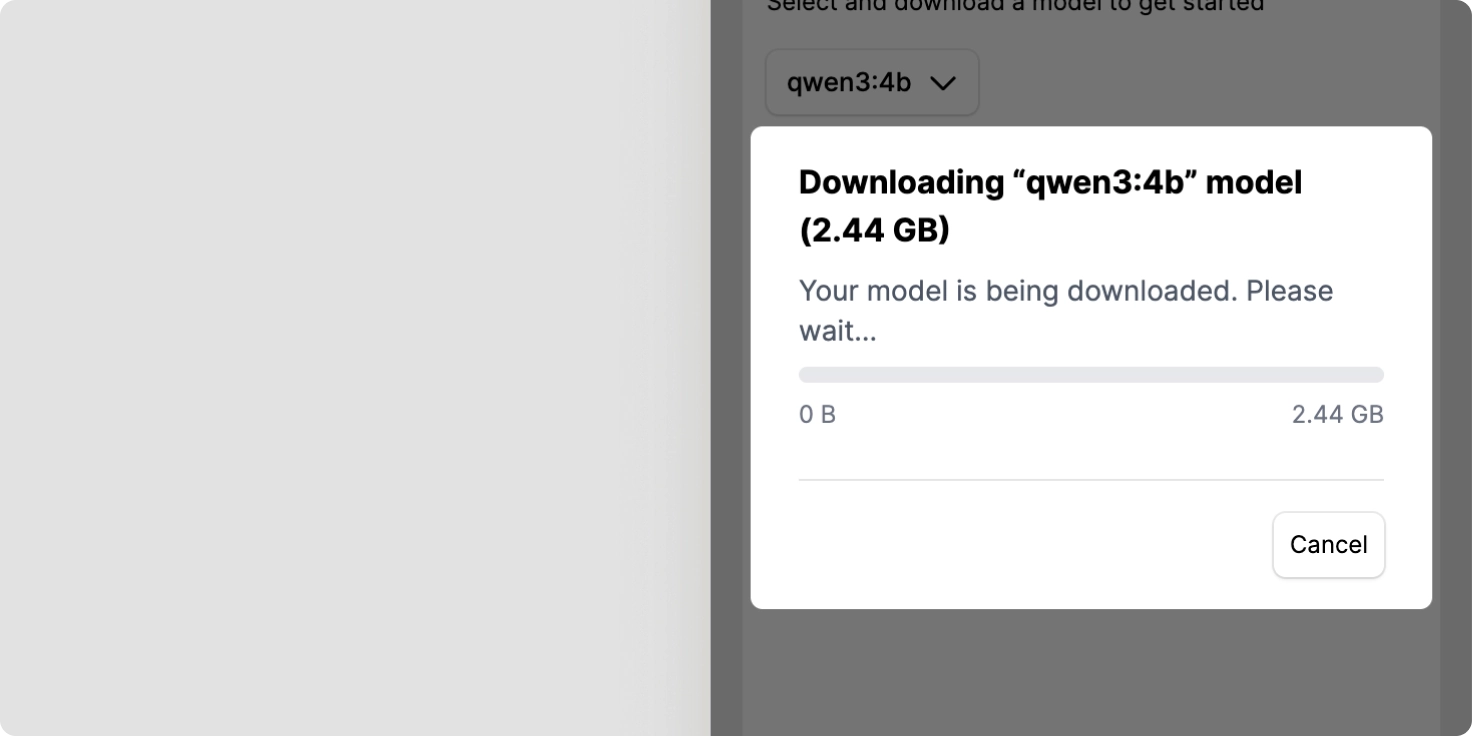

- Will display:

3.5 Wait for Download Completion

- Download time: Approximately 3-10 minutes (depending on internet speed)

- Progress display: Progress bar will show download status

- Cancellable: Click "Cancel" if you don't want to continue downloading
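The 3-10 minute range follows from the 2.6GB file size and typical connection speeds. As a rough sketch (the two speeds below are illustrative assumptions, not figures from NativeMind), a 100 Mbps connection needs about 3.5 minutes and a 35 Mbps connection needs about 10 minutes:

```python
def download_minutes(size_gb, speed_mbps):
    """Estimate download time in minutes for a file of size_gb at speed_mbps."""
    size_megabits = size_gb * 1000 * 8  # 1 GB = 1000 MB, 1 byte = 8 bits
    return size_megabits / speed_mbps / 60


if __name__ == "__main__":
    for speed in (35, 100):  # illustrative connection speeds in Mbps
        print(f"{speed} Mbps: about {download_minutes(2.6, speed):.1f} minutes")
```

Real downloads are often a little slower than this estimate because of network overhead and server load.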

3.6 Download Complete

Once download is complete, NativeMind will automatically:

- Load the model

- Navigate to the chat interface

- Display a welcome message
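If you want to double-check outside the browser that the model really landed on your machine, Ollama's local HTTP API can list the installed models. The sketch below assumes Ollama is running on its default address, http://localhost:11434, and uses the `/api/tags` endpoint. The function names are hypothetical.

```python
import json
import urllib.request


def installed_models(tags_payload):
    """Extract model names from a parsed /api/tags response."""
    return [model["name"] for model in tags_payload.get("models", [])]


def fetch_tags(base_url="http://localhost:11434", timeout=3):
    """GET /api/tags from a locally running Ollama server."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)


if __name__ == "__main__":
    try:
        names = installed_models(fetch_tags())
        print("qwen3:4b installed:", "qwen3:4b" in names)
    except OSError as err:  # connection refused, timeout, and similar failures
        print("Could not reach Ollama:", err)
```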

🔧 Troubleshooting Common Issues

Q1: "Cannot be opened because it is from an unidentified developer"

Solution:

- Open System Settings → Privacy & Security (System Preferences → Security & Privacy on macOS 12 and earlier)

- Click "Open Anyway"

- Or hold the Control key, click Ollama.app, then select "Open"

Q2: NativeMind shows "Ollama not detected"

Solution:

- Confirm that Ollama is properly installed and has been moved to the Applications folder

- Check that the Ollama icon appears in the menu bar at the top right

- Restart the Ollama application

- Click the "Re-scan for Ollama" button in NativeMind
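If the steps above don't help, it can be useful to test whether the Ollama background server is answering at all. Here is a minimal sketch, assuming Ollama listens on its default address, http://localhost:11434. The helper name is hypothetical.

```python
import http.client
import urllib.request


def is_ollama_running(base_url="http://localhost:11434", timeout=2):
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with urllib.request.urlopen(base_url, timeout=timeout) as resp:
            return resp.status == 200
    except (OSError, http.client.HTTPException):
        # Connection refused, timeout, or a malformed reply all count as "not running".
        return False


if __name__ == "__main__":
    if is_ollama_running():
        print("Ollama is answering on localhost:11434")
    else:
        print("No answer from Ollama; try restarting the app")
```

A False result here points to Ollama itself (not started, or still launching) rather than to the NativeMind extension.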

Q3: Installation fails after entering password

Solution:

- Confirm you entered the correct Mac login password

- Ensure your account has administrator privileges

- Restart Ollama and try installing again

Q4: Mac runs slowly

Solution:

- Close other resource-intensive applications

- Choose a smaller model (we recommend qwen3:4b)

- If you have 16GB RAM, consider upgrading to 24GB or more

- Check that your Mac has sufficient free storage space

- Slower performance on Intel-based Macs is normal

🎉 Installation Complete! What's Next?

🌟 Recommended Uses

- Webpage summarization: Intelligently summarize webpage content

- Chat with webpage: Deep understanding of page information, multi-tab support

- Web search integration: Combine with real-time search for latest information and answers

- Immersive translation: One-click webpage translation for immersive reading

- Intelligent writing assistant (Coming Soon): Rewrite, polish, and create various types of text